Does dwell time really matter for SEO?

What’s the actual impact of machine learning on SEO? This has been one of the biggest debates within SEO over the last year.

Please note, this article was originally published on the WordStream blog; it’s reprinted with permission.

I won’t lie: I’ve become a bit obsessed with machine learning. My theory is that RankBrain and/or other machine learning components within Google’s core algorithm are increasingly rewarding pages with high user engagement.

Essentially, Google wants to find unicorns: pages with extraordinary user engagement metrics such as organic search click-through rate (CTR), dwell time, bounce rate, and conversion rate. It then wants to reward that content with higher organic search rankings.

Happier, more engaged users mean better search results, right?

So, essentially, machine learning is Google’s Unicorn Detector.

Machine Learning & Click-Through Rate

Many SEO experts and influencers have said that it’s totally impossible to find any evidence of Google RankBrain in the wild.

That’s ridiculous. You just have to run SEO experiments and be smarter about how you conduct them.

That’s why I previously ran an experiment that looked at CTR over time. I hoped to find evidence of machine learning.

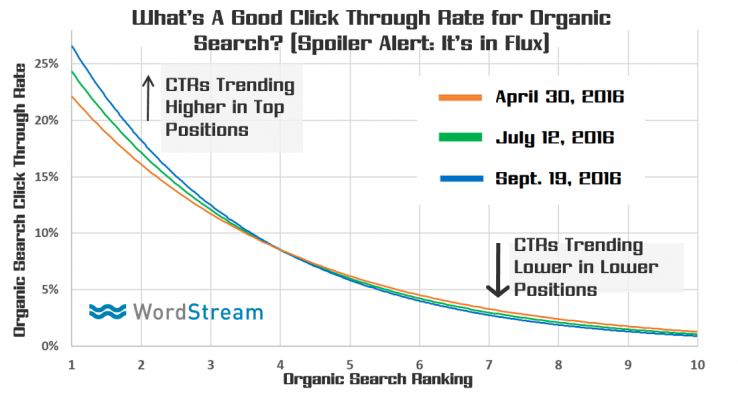

What I found: results that have higher organic search CTRs are getting pushed higher up the SERPs and getting more clicks.

Click-through rate is just one way to see the impact of machine learning algorithms. Today, let’s look at another important engagement metric: long clicks, or visits that stay on site for a long time after leaving the SERP.

Time on Site Acts as a Proxy for Long Clicks

Still not convinced that long clicks influence organic search rankings (whether directly or indirectly)? Well, I’ve come up with a super easy way to prove to yourself that the long click matters, while also revealing the impact of machine learning algorithms.

In today’s experiment, we’re going to measure time on page. To be clear: time on page isn’t the same as dwell time or a long click (that is, how long people stay on your site before they hit the back button to return to the search results where they found you).

We can’t measure long clicks or dwell time in Google Analytics. Only Google has access to this data.

Time on page itself doesn’t really matter to us. We’re only looking at time on page because it is very likely proportional to those metrics.

Time on Site & Organic Traffic (Before RankBrain)

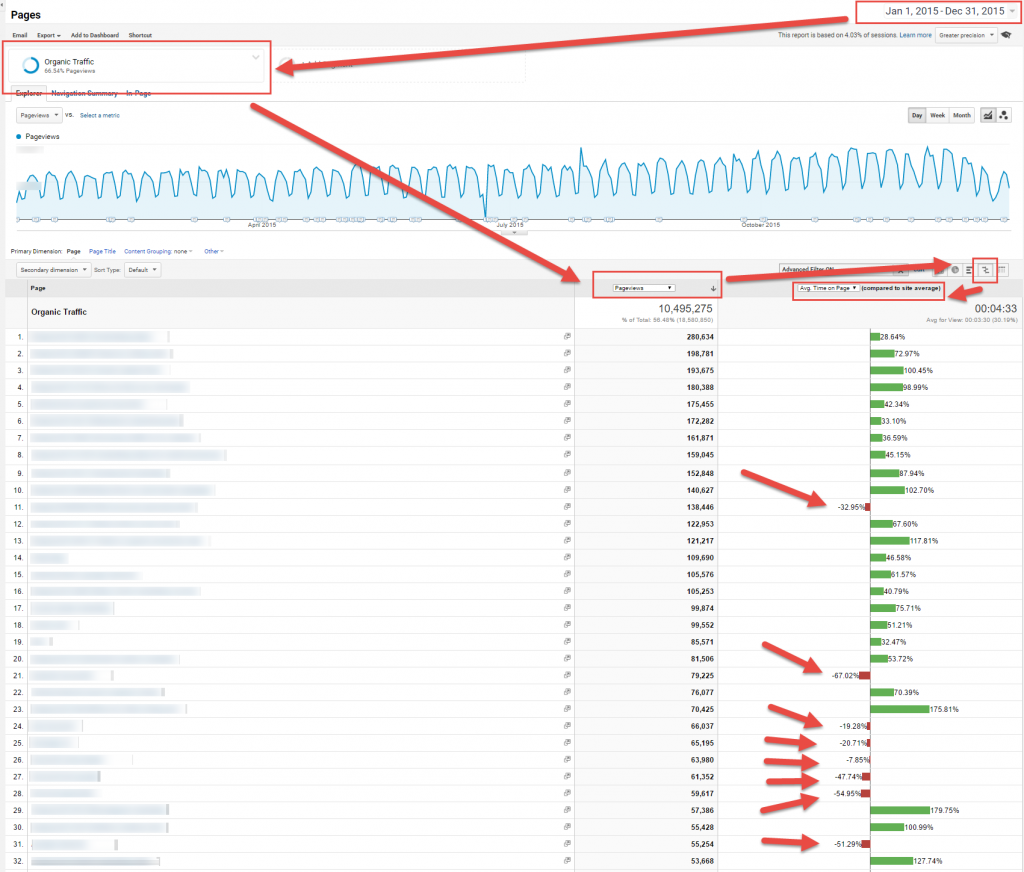

To get started, go into your analytics account. Pick a time frame before the new algorithms were in play (i.e., 2015).

Segment your content report to view only your organic traffic, and then sort by pageviews. Then run a Comparison Analysis that compares your pageviews to average time on page.

You’ll see something like this:

These 32 pages drove the most organic traffic to our site in 2015. Time on site is above average for about two-thirds of these pages, but it’s below average for the remaining third.

See all those pink arrows? Those are donkeys: pages that were ranking well in organic search but, in all honesty, probably had no business ranking well, at least for the search queries that were driving the most traffic. They were out of their league. Their time on page was a half or a third of the site average.
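If you export the organic-only report instead of reading it off the chart, the Comparison Analysis steps above are easy to reproduce. Here is a minimal sketch: the `PageRow` type, the `comparison_analysis` and `share_above_average` helpers, and the sample numbers are all hypothetical, and it assumes you take the site-wide average time on page straight from your analytics account rather than recomputing it. It sorts pages by pageviews, keeps the top N (32, as in our report), and shows how far each page sits above or below the site average, labeling the below-average ones as donkeys.

```python
from dataclasses import dataclass


@dataclass
class PageRow:
    """One row of a hypothetical organic-only content report export."""
    page: str
    pageviews: int
    avg_time_on_page: float  # seconds


def comparison_analysis(rows, site_avg_time, top_n=32):
    """Sort by pageviews, keep the top_n pages, and compare each page's
    average time on page with the site average (taken from analytics).

    Returns (page, pageviews, percent_vs_average, label) tuples. Pages at
    or below the site average are labeled "donkey" (an assumed cutoff),
    pages above it "unicorn".
    """
    top = sorted(rows, key=lambda r: r.pageviews, reverse=True)[:top_n]
    results = []
    for r in top:
        pct = (r.avg_time_on_page - site_avg_time) / site_avg_time * 100
        label = "unicorn" if r.avg_time_on_page > site_avg_time else "donkey"
        results.append((r.page, r.pageviews, round(pct, 1), label))
    return results


def share_above_average(results):
    """Fraction of analyzed pages whose time on page beats the site average."""
    if not results:
        return 0.0
    return sum(1 for r in results if r[3] == "unicorn") / len(results)


# Hypothetical export: three pages, site average of 60 seconds.
rows = [
    PageRow("/guide", 5000, 120.0),
    PageRow("/tool", 8000, 30.0),
    PageRow("/faq", 2000, 90.0),
]
for result in comparison_analysis(rows, site_avg_time=60.0):
    print(result)
# ('/tool', 8000, -50.0, 'donkey')
# ('/guide', 5000, 100.0, 'unicorn')
# ('/faq', 2000, 50.0, 'unicorn')
```

Keeping the site average as an input, rather than averaging the exported rows, means the comparison uses the same baseline your analytics tool shows.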

Time on Site & Organic Traffic (After RankBrain)

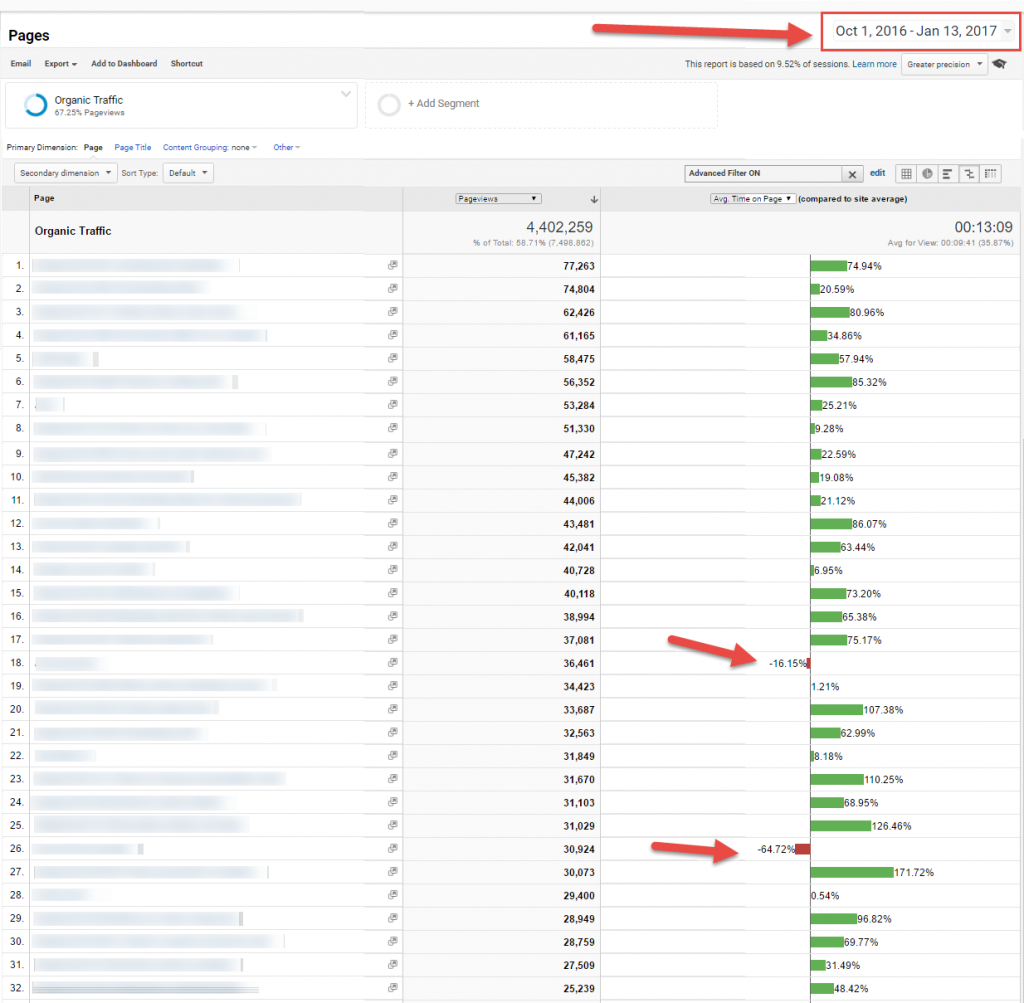

Now let’s do the same analysis, but for a more recent time period when we know Google’s machine learning algorithms were in use (e.g., the last three or four months).

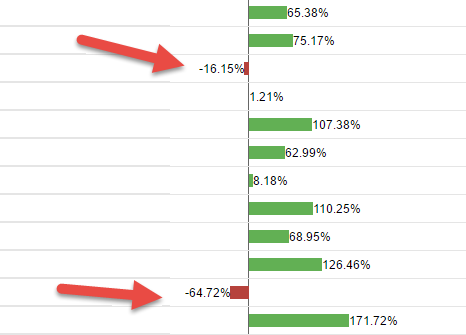

Run the same comparison analysis. You’ll see something like this:

Look at what happens now when we analyze the organic traffic. All but two of our top pages have above-average time on page.

This is kind of amazing to see. So what’s going on?

Does Longer Dwell Time = Higher Search Rankings?

It seems that Google’s machine learning algorithms have seen through all those pages that used to rank well in 2015 but didn’t really deserve to. And, to me, it certainly looks like Google is rewarding higher dwell time with more prominent search positions.

Google detected most of the donkeys (about 80 percent of them!), and now nearly all the pages with the most organic traffic are time-on-site unicorns.
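That “about 80 percent” figure comes from comparing two lists: the below-average pages in the 2015 top list and the below-average pages in the recent top list. A minimal sketch, assuming you’ve already labeled each period’s top pages; the `donkey_turnover` helper and the page paths are hypothetical. Keep the caveats below in mind: a page dropping off the list shows a change in traffic share, not proof of a ranking loss.

```python
def donkey_turnover(old_donkeys, new_donkeys):
    """Share of earlier below-average top pages ("donkeys") that no longer
    appear among the below-average top pages of the later period.

    New donkeys that only appear in the later period do not affect the result.
    """
    old = set(old_donkeys)
    if not old:
        return 0.0
    gone = old - set(new_donkeys)
    return len(gone) / len(old)


# Hypothetical page paths for illustration.
before = ["/a", "/b", "/c", "/d", "/e"]  # below-average top pages, 2015
after = ["/a", "/x"]                     # below-average top pages, recent
print(f"{donkey_turnover(before, after):.0%} of the old donkeys are gone")
# prints: 80% of the old donkeys are gone
```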

I won’t tell you which pages on the WordStream site these donkeys are, but I will tell you that some of them were created simply to bring in traffic (mission accomplished), and their alignment with search intent wasn’t great. Most likely someone created a page that matched the intent better.

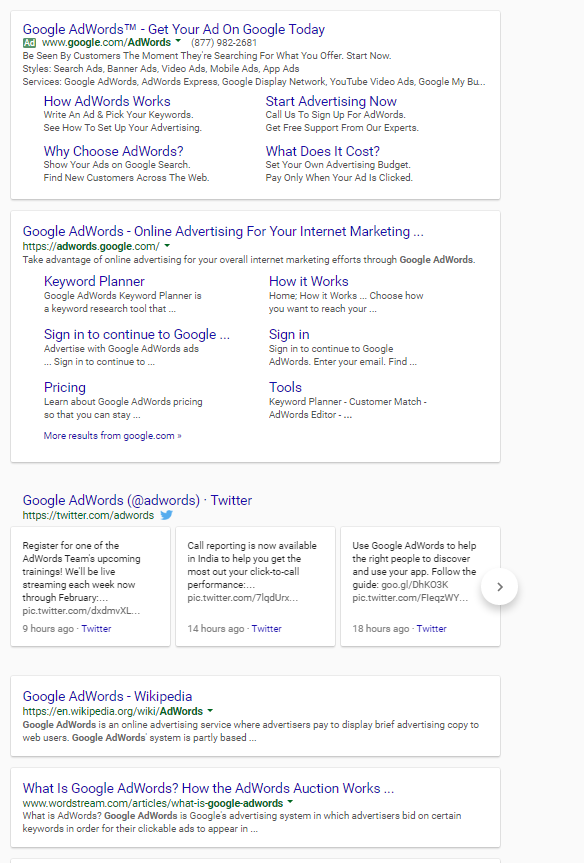

In fact, here’s one example: our AdWords Grader used to rank on page 1 for the query “google adwords” (which has huge search volume: over 300,000 searches a month!). The intent match there is low. Most people who search for that phrase are just using it as a navigational keyword, to get to the AdWords site.

A small percentage of those searchers might just want to know more about Google AdWords and what it is, kind of using Google as a way to find a Wikipedia page. Nothing in the query indicates that they might be looking for a tool to help them diagnose AdWords problems. And guess what? In 2015, the Grader page was one of those top 30 pages, but it had below-average time on site.

So at some point, Google tested a different result in place of the Grader: our infographic about how Google AdWords works. It’s still ranking on page 1 for that search query, and it matches the informational intent of the keyword much better.

A Few Caveats on the Data

To be clear, I know these analytics reports don’t directly show a loss in rankings. There are other potential explanations for the differences in the reports. Maybe we created lots of new, super-awesome content that’s ranking for higher-volume keywords, and it simply displaced the time-on-site “donkeys” from 2015. Further, certain types of pages might have low time on site for a perfectly acceptable reason (for example, they might give users the information they want very quickly).

But internally, we know for sure that several of our pages with below-average time on site have fallen in the rankings (again, at least for certain keywords) in the past couple of years.

And regardless, it’s very compelling to see that the pages driving the most organic traffic overall (the WordStream site has hundreds of pages) have way above-average time on site. It strongly suggests that pages with amazing, unicorn-level engagement metrics are going to be the most valuable to your business overall.

This report also revealed something ridiculously important for us: the pages with below-average time on site are our most vulnerable pages in terms of SEO. In other words, the two remaining pages in the second chart above that still have below-average time on site are the ones most likely to lose organic rankings and traffic in the new machine learning world.

What’s so great about this report is that you don’t have to do a lot of research or hire an SEO to do a detailed audit. Just open up your analytics and look at the data yourself. You should be able to compare changes from a long time ago to recent history (the last three or four months).

What Does It All Mean?

This report is basically your donkey detector. It will show you the content that could be most vulnerable to future incremental traffic and search ranking losses from Google.

That’s how machine learning works. Machine learning doesn’t wipe out all your traffic overnight (like a Panda or Penguin). It’s gradual.

What should you do if you have lots of donkey content?

Prioritize the pages that are most at risk: those with below-average or near-average time on site. If there’s no good reason for these pages to have below-average time on site, put them at the top of your list for rewriting or fixing up so they align better with user intent.
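One way to build that priority list from an exported report is sketched below. It assumes a dictionary of average time on page per URL and treats anything within 10 percent above the site average as “near average”; that margin, the `at_risk_pages` helper, and the sample data are all hypothetical choices, not part of our report. The output is only a starting point: you still have to decide by hand whether a page has a legitimate reason for short visits.

```python
def at_risk_pages(times, site_avg, near_margin=0.10):
    """Return (page, ratio_to_site_average) for pages whose average time on
    page is below the site average or within near_margin above it.

    near_margin is an assumed cutoff for "near average". Results are sorted
    so the lowest ratio (most at risk) comes first.
    """
    cutoff = site_avg * (1 + near_margin)
    risky = [(page, t / site_avg) for page, t in times.items() if t <= cutoff]
    return sorted(risky, key=lambda item: item[1])


# Hypothetical average time on page (seconds) for top organic pages.
times = {"/a": 30.0, "/b": 63.0, "/c": 120.0, "/d": 45.0}
for page, ratio in at_risk_pages(times, site_avg=60.0):
    print(f"{page}: {ratio:.2f}x site average")
# /a: 0.50x site average
# /d: 0.75x site average
# /b: 1.05x site average
```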

Now go look at your own data and see if you agree that time on site plays a role in your organic search rankings.

Don’t just take my word for it. Go look: run your own reports and let me know what you find.