Imitating search algorithms for a successful link building strategy

When developing a link building strategy – or any other kind of SEO-related strategy, for that matter – you may find the sheer amount of data available to us at a relatively low cost quite staggering.

However, that can often create a problem in itself; selecting the best data to use, and then using it in a way that makes it useful and actionable, can be a lot more difficult.

Creating a link building strategy and getting it right is essential – links are within the top two ranking signals for Google.

This may seem obvious, but to ensure you are building a link strategy that is going to benefit from this major ranking signal and be used on an ongoing basis, you need to do more than just provide metrics on your competitors. You should be providing opportunities for link building and PR that will guide you on the sites you need to acquire links from to get the most benefit.

To us at Zazzle Media, the best way to do this is by performing a large-scale competitor link intersect.

Rather than just showing you how to implement a large-scale link intersect, which has been done many times before, I’m going to explain a smarter way of doing one by mimicking well-known search algorithms.

Doing your link intersect this way will always return better results. Below are the two algorithms we will be trying to replicate, along with a short description of each.

Topic-sensitive PageRank

Topic-sensitive PageRank is an evolved version of the PageRank algorithm that passes an additional signal on top of the traditional authority and trust scores. This additional signal is topical relevance.

The basis of this algorithm is that seed pages are grouped by the topic to which they belong. For example, the sports section of the BBC website would be categorised as being about sport, the politics section about politics, and so on.

All external links from those parts of the site would then pass on sport or politics-related topical PageRank to the linked site. This topical score would then be passed around the web via external links, just as the traditional PageRank algorithm passes authority.
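As a rough illustration of the idea (not Google’s implementation), the sketch below runs a PageRank-style iteration in which the random jump only ever lands on pages from a topic’s seed set, so all topical score originates from those seeds and flows outwards through links. The toy graph, the page names, the damping factor of 0.85 and the function name are assumptions made purely for the example.

```python
def topic_sensitive_pagerank(links, seeds, damping=0.85, iterations=50):
    """PageRank-style power iteration whose teleport step jumps only to seed pages."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    # Teleport vector: uniform over the topic's seed pages, zero elsewhere.
    teleport = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(teleport)
    for _ in range(iterations):
        new_rank = {n: (1.0 - damping) * teleport[n] for n in nodes}
        for source in nodes:
            targets = links.get(source, [])
            if targets:
                # Split the source's score evenly across its outgoing links.
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Page with no outgoing links: hand its score back to the seeds.
                for n in nodes:
                    new_rank[n] += damping * rank[source] * teleport[n]
        rank = new_rank
    return rank


# Hypothetical toy web: two sport seed pages and three other sites.
links = {
    "bbc.co.uk/sport": ["footballblog.example", "kitshop.example"],
    "skysports.example": ["footballblog.example"],
    "footballblog.example": ["kitshop.example"],
    "recipes.example": ["kitshop.example"],
}
sport_rank = topic_sensitive_pagerank(
    links, seeds={"bbc.co.uk/sport", "skysports.example"}
)
```

In this toy graph the recipes site ends up with no sport score at all, because no sport seed links to it, directly or indirectly, even though it links out to a site that does earn a score.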

You can read more about topic-sensitive PageRank in this paper by Taher Haveliwala. Not long after writing it, he went on to become a Google software engineer. Matt Cutts also mentioned topical PageRank in this video.

Hub and Authority Pages

The idea of the internet being full of hub (or expert) and authority pages has been around for quite a while, and Google is likely to be using some form of this algorithm.

You can see this topic being written about in this paper on the Hilltop algorithm by Krishna Bharat, or in Authoritative Sources in a Hyperlinked Environment by Jon M. Kleinberg.

In the first paper by Krishna Bharat, an expert page is defined as a ‘page that is about a certain topic and has links to many non-affiliated pages on that topic’.

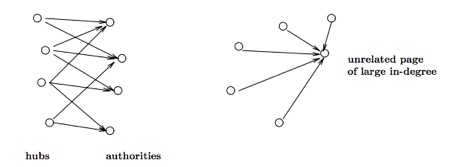

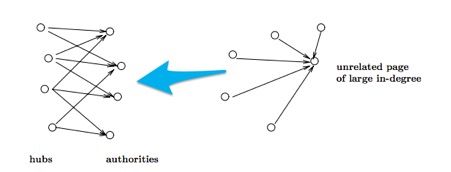

A page is defined as an authority if ‘some of the best experts on the query topic point to it’. The diagram from the Kleinberg paper shows hubs and authorities, as well as unrelated pages linking to a site.

We will be replicating the above diagram with backlink data later on!

From this paper we can gather that to become an authority and rank well for a particular term or topic, we should be looking for links from these expert/hub pages.

We need to do this because these sites are used to decide who is an authority and should be ranking well for a given term. Rather than replicating the above algorithm at the page level, we will instead be doing it at the domain level, simply because hub domains are more likely to produce hub pages.
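To make the hub/authority relationship concrete, here is a minimal sketch of the iterative scoring described in the Kleinberg paper, applied to domains rather than pages. It is an illustration only: the domain names are invented, and the function name and iteration count are assumptions.

```python
import math


def hits(links, iterations=30):
    """Kleinberg-style hub and authority scores for a dict of domain -> linked domains."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # Authority score: sum of the hub scores of the domains linking in.
        auth = {n: 0.0 for n in nodes}
        for source, targets in links.items():
            for target in targets:
                auth[target] += hub[source]
        # Hub score: sum of the authority scores of the domains linked out to.
        hub = {n: sum(auth[t] for t in links.get(n, [])) for n in nodes}
        # Normalise both score sets so the values stay bounded.
        for scores in (auth, hub):
            norm = math.sqrt(sum(v * v for v in scores.values())) or 1.0
            for n in scores:
                scores[n] /= norm
    return hub, auth


# Hypothetical link data: three linking domains and three shops.
links = {
    "weddingblog.example": ["shop-a.example", "shop-b.example", "shop-c.example"],
    "bridalmag.example": ["shop-a.example", "shop-b.example"],
    "localnews.example": ["shop-c.example"],
}
hub, auth = hits(links)
best_hub = max(hub, key=hub.get)
```

The domain that links to the most authorities gets the highest hub score, while a domain linking to a single shop scores lowest as a hub. That is the distinction the rest of this post reproduces with backlink data.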

Relevancy as a Link Signal

You probably noticed that both of the above algorithms aim to do very similar things: they pass authority depending on the relevance of the source page.

You will find similar aims in other link-based search algorithms, including phrase-based indexing. This algorithm is slightly beyond the scope of this blog post, but if we get links from the hub sites we should also be ticking the box to benefit from phrase-based indexing.

If anything, learning about these algorithms should be influencing you to build relationships with topically relevant authority sites to improve rankings. Continue below to find out exactly how to find these sites.

1 – Picking your target topic/keywords

Before we find the hub/expert pages we are aiming to get links from, we first need to determine which pages are our authorities.

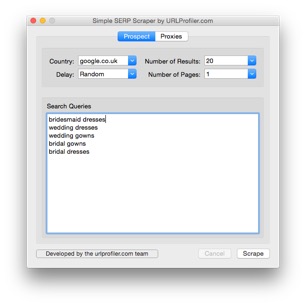

This is easy to do, as Google tells us which sites it deems an authority within a topic in its search results. We just need to scrape search results for the top sites ranking for the related keywords that you want to improve rankings for. In this example we have chosen the following keywords:

- bridesmaid dresses

- wedding dresses

- wedding gowns

- bridal gowns

- bridal dresses

For scraping search results we use our own in-house tool, but the Simple SERP Scraper by the creators of URL Profiler will also work. I recommend scraping the top 20 results for each term.

2 – Finding your authorities

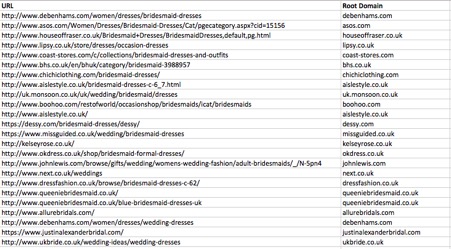

You should now have a list of URLs ranking for your target terms in Excel. Delete columns so that you have just the URL that is ranking, in column A. Now, in column B, add a header called ‘Root Domain’. In cell B2 add the following formula:

=IF(ISERROR(FIND("//www.",A2)), MID(A2,FIND(":",A2,4)+3,FIND("/",A2,9)-FIND(":",A2,4)-3), MID(A2,FIND(":",A2,4)+7,FIND("/",A2,9)-FIND(":",A2,4)-7))

Expand the results downwards so that you have the root domain for every URL. Your spreadsheet should now look like this:

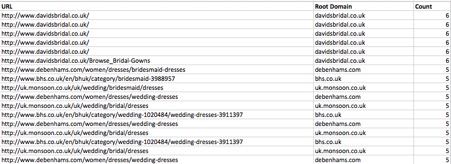
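If you would rather script this step than use the spreadsheet formula, the same idea can be sketched in Python. Like the formula, this simply strips the protocol and a leading ‘www.’ and keeps any other subdomain; the function name and the example URLs are assumptions for illustration.

```python
from urllib.parse import urlparse


def root_domain(url):
    """Return the host part of a URL, lower-cased, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


# Hypothetical ranking URLs, standing in for the scraped URLs in column A.
ranking_urls = [
    "https://www.example-bridal.co.uk/wedding-dresses/",
    "http://shop.example.com/bridesmaid-dresses",
    "https://example.org",
]
root_domains = [root_domain(u) for u in ranking_urls]
```

One practical difference: the formula looks for a slash after the domain, so a URL with no trailing path would return an error in Excel, whereas the parser above handles it.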

Next add another heading in column C called ‘Count’, and in C2 add and drag down the following formula:

=COUNTIF(B:B,B2)

This will just count how many times that domain is showing up for the keywords we scraped. Next we need to copy column C and paste it as values to remove the formula. Then just sort the table using column C from largest to smallest. Your spreadsheet should now look like this:

Now we just need to remove the duplicate domains. We do this by going into the ‘Data’ tab in the ribbon at the top of Excel and selecting ‘Remove Duplicates’. Then just remove duplicates on column B. We can also delete column A so we just have our root domains and the number of times each domain is found within the search results.

We now have the domains that Google believes to be an authority on the wedding dresses topic.
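The count, sort and de-duplicate steps collapse into a single operation if scripted. The sketch below is one way to do it, assuming you already have one root domain per ranking URL; the domain names are made up.

```python
from collections import Counter


def rank_authorities(root_domains):
    """Count appearances per root domain; return (domain, count) pairs, highest first."""
    return Counter(root_domains).most_common()


# Hypothetical root domains, one entry per ranking URL across all keywords.
scraped = [
    "dress-shop.example", "bridal-store.example", "dress-shop.example",
    "gowns.example", "dress-shop.example", "bridal-store.example",
]
authorities = rank_authorities(scraped)
```

Each domain appears once in the result, alongside the number of search results it was found in, which matches the two columns left in the spreadsheet.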

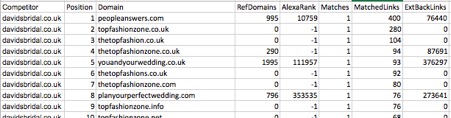

3 – Export referring domains for authority sites

We usually use Majestic to get the referring domains for the authority sites, mainly because they have an extensive database of links. Plus, you get their metrics such as Citation Flow and Trust Flow, as well as Topical Trust Flow (more on Topical Trust Flow later).

If you want to use Ahrefs or another service, you could use a metric they provide that is similar to Trust Flow. You will, however, miss out on Topical Trust Flow. We’ll make use of this later on.

Pick the top domains with a high count from the spreadsheet we have just created. Then enter them into Majestic and export the referring domains. Once we have exported the first domain, we need to insert an empty column in column A. Give this column a header called ‘Competitor’, then enter the root domain in A2 and drag down.

Repeat this process for the next competitor, except this time copy and paste the new export into the first sheet we exported (excluding the headers) so we have all the backlink data in one sheet.

I recommend repeating this until you have at least ten competitors in the sheet.
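The copy-and-paste routine above can be sketched in a few lines if you prefer to combine the exports programmatically. This is only an illustration: the column names ‘Domain’ and ‘TrustFlow’ and the inline CSV text are stand-ins, and a real export will contain many more columns.

```python
import csv
import io


def combine_exports(exports):
    """Merge per-competitor CSV exports into one list of rows with a 'Competitor' field."""
    combined = []
    for competitor, csv_text in exports.items():
        for row in csv.DictReader(io.StringIO(csv_text)):
            # Prepend the competitor's root domain, like the new column A.
            combined.append({"Competitor": competitor, **row})
    return combined


# Hypothetical exports keyed by competitor root domain.
exports = {
    "dress-shop.example": "Domain,TrustFlow\nweddingblog.example,35\nlocalnews.example,12\n",
    "bridal-store.example": "Domain,TrustFlow\nweddingblog.example,35\n",
}
backlinks = combine_exports(exports)
```

With real files you would read each export from disk instead of from a string; the merging logic stays the same.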

4 – Tidy up the spreadsheet

Now that we have all the referring domains we need, we can clean up our spreadsheet and remove unnecessary columns.

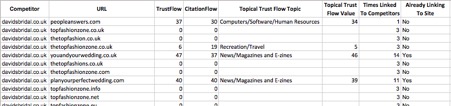

I usually delete all columns except the competitor, domain, TrustFlow, CitationFlow, Topical Trust Flow Topic 0 and Topical Trust Flow Value 0 columns.

I also rename the domain header to ‘URL’, and tidy up the Topical Trust Flow headers.

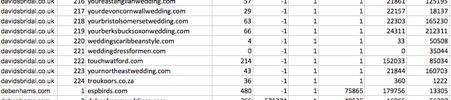

5 – Count repeat domains and mark already acquired links

Now that we have the data, we need to use the same formula as earlier to highlight the hub/expert domains that are linking to multiple topically relevant domains.

Add a header called ‘Times Linked to Competitors’ in column G and add this formula in G2:

=COUNTIF(B:B,B2)

This will now tell you how many competitors the site in column B is linking to. You will also want to mark domains that are already linking to your site, so we aren’t building links on the same domain multiple times.

To do this, first add a heading in column H called ‘Already Linking to Site?’. Next, create a new sheet in your spreadsheet called ‘My Site Links’ and export all the referring domains for your site’s domain from Majestic. Then paste the export into the newly created sheet.

Now, in cell H2 in our first sheet, add the following formula:

=IFERROR(IF(MATCH(B2,'My Site Links'!B:B,0),"Yes",),"No")

This checks if the URL in cell B2 is in column B of the ‘My Site Links’ sheet (change B:B if the domains sit in a different column of your export) and returns Yes or No depending on the result. Now copy columns G and H and paste them as values, just to remove the formulas again.
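For anyone scripting this step, the two columns can be computed as in the sketch below. It assumes rows shaped like the tidied sheet (a ‘Competitor’ and a ‘URL’ field per row); the function name and sample data are hypothetical. Unlike COUNTIF, it counts distinct competitors, so an accidentally duplicated row would not inflate the number.

```python
from collections import defaultdict


def annotate(rows, my_referring_domains):
    """Add 'Times Linked to Competitors' and 'Already Linking to Site?' to each row."""
    competitors_by_domain = defaultdict(set)
    for row in rows:
        competitors_by_domain[row["URL"]].add(row["Competitor"])
    mine = set(my_referring_domains)
    return [
        {
            **row,
            "Times Linked to Competitors": len(competitors_by_domain[row["URL"]]),
            "Already Linking to Site?": "Yes" if row["URL"] in mine else "No",
        }
        for row in rows
    ]


# Hypothetical combined backlink rows and our own site's referring domains.
rows = [
    {"Competitor": "dress-shop.example", "URL": "weddingblog.example"},
    {"Competitor": "bridal-store.example", "URL": "weddingblog.example"},
    {"Competitor": "dress-shop.example", "URL": "localnews.example"},
]
annotated = annotate(rows, my_referring_domains=["localnews.example"])
```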

In this instance, I have added ellisbridals.co.uk as our website.

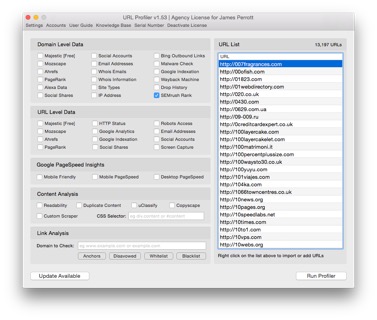

6 – Organic traffic estimate (optional)

This step is entirely optional, but at this point I will usually also pull in some metrics from SEMrush.

I like to use SEMrush’s Organic Traffic metric to give a further indication of how well a site is ranking for its target keywords. If it is not ranking very well or has a low organic traffic score, this is a pretty good indication that the site has either been penalised, been de-indexed or is just low quality.

Move on to step 8 if you do not want to do this.

To get this information from SEMrush, you can use URL Profiler. Just save your spreadsheet as a CSV, right-click in the URL list area in URL Profiler, then import the CSV and merge data.

Next, you need to tick ‘SEMrush Rank’ in the domain level data, enter your API key, then run the profiler.

If you are running this on a large set of data and want to speed up collecting the SEMrush metrics, you can do what I sometimes do and remove domains with a Trust Flow score at or close to zero in Excel before importing. This is just to eliminate the bulk of the low-quality, untrustworthy domains that you do not want to be building links from. It also saves some SEMrush API credits!

7 – Clean up URL Profiler output (still optional)

Now that we have the new spreadsheet that includes SEMrush metrics, you just need to clean up the output in the combined results sheet.

I will usually remove all columns that have been added by URL Profiler and just leave the new SEMrush Organic Traffic column.

8 – Visualise the data using a network graph

I usually do steps 8, 9 and 10 so the data is more presentable, rather than having the end product as just a spreadsheet. It also makes filtering the data to find your ideal metrics easier.

These graphs are very quick and easy to create using Google Fusion Tables, so I recommend doing them.

To create them, save your spreadsheet as a CSV file, then go to create a new file in Google Drive and select the ‘Connect More Apps’ option. Search for ‘Fusion Tables’ and then connect the app to your Drive account.

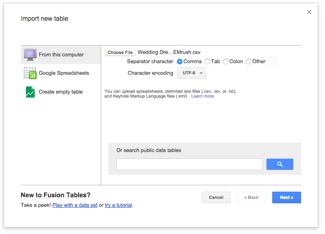

Once that is done, create a new file in Google Drive and select Google Fusion Tables. We then just need to upload our CSV file from our computer and select ‘Next’ in the bottom right.

After the CSV has been loaded, you will need to import the table by clicking ‘Next’ again. Give your table a name and select ‘Finish’.

9 – Create a hub/expert network graph

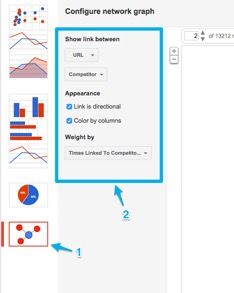

Now that the rows are imported, we need to create our network graph by clicking on the red + icon and then ‘Add chart’.

Next, select the network graph at the bottom and configure the graph with the following settings:

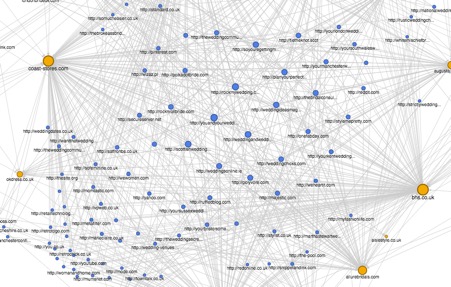

Your graph should now look something like the one below. You may need to increase the number of nodes it shows so more sites start to show up. Be aware that the more nodes there are, the more demanding it is on your computer. You will also need to select ‘Done’ in the top right corner of the chart so we are no longer configuring it.

If you haven’t already figured it out, the yellow circles on the chart are our competitors; the blue circles are the sites linking to our competitors. The bigger a competitor’s circle, the more referring domains it has. A linking site’s circle gets bigger depending on how much of a hub domain it is. This is because, when setting up the chart, we weighted it by the number of times a site links to our competitors.

While the above graph looks pretty great, we have a lot of sites in it that fit the ‘unrelated page of large in-degree’ categorisation mentioned in the Kleinberg paper earlier, as they only link to one authority site.

We want to be turning the diagram on the right into the diagram on the left:

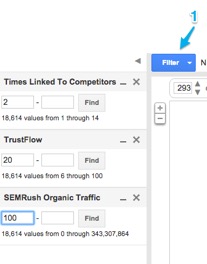

This is really simple to do by adding the filters below.

Filtering so that only sites linking to more than one competitor are shown leaves only hub/expert domains; filtering by Trust Flow and SEMrush Organic Traffic removes lower quality, untrustworthy domains.

You may need to play around with the Trust Flow and SEMrush Organic Traffic thresholds depending on the sites you are trying to target. Our chart has now gone down to 422 domains from 13,212.
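The same filters are easy to express in code if you want to test different thresholds quickly. In this sketch the threshold values (two competitors, Trust Flow of 20, organic traffic of 100) are placeholders chosen for the example, not recommendations, and the rows are invented.

```python
def hub_candidates(rows, min_competitors=2, min_trust_flow=20, min_traffic=100):
    """Keep rows for domains that link to several competitors and pass quality checks."""
    return [
        row for row in rows
        if int(row["Times Linked to Competitors"]) >= min_competitors
        and int(row["TrustFlow"]) >= min_trust_flow
        and int(row["SEMrush Organic Traffic"]) >= min_traffic
    ]


# Hypothetical rows as they might look after the earlier steps.
rows = [
    {"URL": "weddingblog.example", "Times Linked to Competitors": "4",
     "TrustFlow": "35", "SEMrush Organic Traffic": "5200"},
    {"URL": "localnews.example", "Times Linked to Competitors": "1",
     "TrustFlow": "40", "SEMrush Organic Traffic": "9000"},
    {"URL": "spamdirectory.example", "Times Linked to Competitors": "6",
     "TrustFlow": "3", "SEMrush Organic Traffic": "0"},
]
kept = hub_candidates(rows)
kept_domains = sorted({row["URL"] for row in kept})
```

Here the local news site is dropped because it links to only one competitor, and the directory is dropped on quality, which mirrors what the chart filters do.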

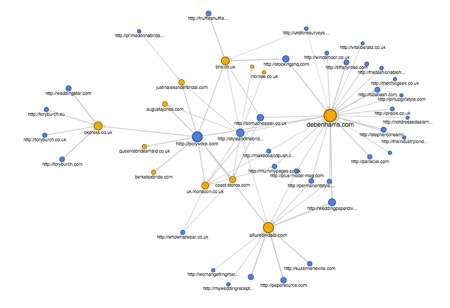

If you want to, at this point you can also add another filter to only show sites that are not already linking to your site. Here is what our chart now looks like:

The above chart is now a lot more manageable. You can see the top hub/expert domains we want to be building relationships with floating around the middle of the chart. Here is a close-up of some of those domains:

Your PR/outreach team should now have plenty to be getting on with! You can see the results are pretty good, with lots of wedding-related sites that you should start building relationships with.

10 – Filter to show pages that will pass high topical PageRank

Before we get into creating this graph, I am first going to explain why we can substitute Topical Trust Flow by Majestic for topic-sensitive PageRank.

Topical Trust Flow works in a very similar way to topic-sensitive PageRank, in that it is calculated via a manual review of a set of seed sites. This topical data is then propagated throughout the entire web to give a Topical Trust Flow score for every page and domain on the internet.

This gives you a good idea of what topic an individual site is an authority on. In this case, if a site has a high Topical Trust Flow score for a wedding-related subject, we want some of that wedding-related authority to be passed on to us via a link.

Now, onto creating the graph. Since we know how these graphs work, doing this should be a lot quicker.

Create another network graph just as before, except this time weight it by Topical Trust Flow value. Create a filter for Topical Trust Flow Topic and pick any topics related to your site.

For this site, I have chosen Shopping/Weddings and Shopping/Clothing. I usually also use a similar filter to the previous chart for Trust Flow and organic traffic to prevent any low-quality results from showing.

Fewer results are returned for this chart, but if you want more authority passed within a topic, these are the sites you want to be building relationships with.
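As a scripted equivalent of this second chart, the sketch below keeps only rows whose topic is in a chosen set and orders them by Topical Trust Flow value, highest first. The column names follow the tidied headers used earlier; the Trust Flow threshold and the sample rows are assumptions.

```python
def topical_candidates(rows, topics, min_trust_flow=20):
    """Filter rows to the chosen topics and sort by Topical Trust Flow value, descending."""
    keep = [
        row for row in rows
        if row["Topical Trust Flow Topic"] in topics
        and int(row["TrustFlow"]) >= min_trust_flow
    ]
    return sorted(keep, key=lambda r: int(r["Topical Trust Flow Value"]), reverse=True)


# Hypothetical rows with tidied Topical Trust Flow headers.
rows = [
    {"URL": "fashionmag.example", "TrustFlow": "45",
     "Topical Trust Flow Topic": "Shopping/Clothing", "Topical Trust Flow Value": "30"},
    {"URL": "weddingblog.example", "TrustFlow": "35",
     "Topical Trust Flow Topic": "Shopping/Weddings", "Topical Trust Flow Value": "33"},
    {"URL": "carforum.example", "TrustFlow": "50",
     "Topical Trust Flow Topic": "Recreation/Autos", "Topical Trust Flow Value": "48"},
]
targets = topical_candidates(rows, topics={"Shopping/Weddings", "Shopping/Clothing"})
target_domains = [row["URL"] for row in targets]
```

The car forum has the strongest scores overall but is excluded, because its authority sits in an unrelated topic.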

You can play around with the different topics depending on the sites you want to find. For example, you may want to find sites within the News/Magazines and E-Zines topic for PR.

11 – Replicate Filters in Google Sheets

This step is very simple and does not need much explanation.

I import the spreadsheet we created earlier into Google Sheets, then just duplicate the sheet and add the same filters as the ones I created in the network graphs. I usually also add an ‘Outreached?’ header so the team knows whether we have an existing relationship with the site.

I recommend doing this because, while the charts look great and visualise your data in a fancy way, a sheet helps with tracking which sites you have already spoken to.

Summary

You should now know what you need to be doing for your site or client to not just drive more link equity into their site, but also drive topically relevant link equity that will benefit them the most.

While this seems to be a long process, these graphs do not take that long to create – especially when you compare the benefit the site will receive from them.

There are many more uses for network graphs. I occasionally use them for visualising internal links on a site to find gaps in its internal linking strategy.

I would love to hear any other ideas you may have for making use of these graphs, as well as any other things you like to do when creating a link building strategy.

Sam Underwood is a Search and Data Executive at Zazzle and a contributor to Search Engine Watch.